Understanding ETL Pipelines in Data Analysis

ETL Pipelines in Brief

- ETL stands for Extract, Transform, Load, a process used to integrate data from multiple sources.

- An ETL pipeline is a set of processes that move data from one or more sources to a target system like a data warehouse.

- The transformation stage involves cleaning, aggregating, and preparing data for analysis.

- ETL pipelines are essential for data warehousing, business intelligence, and data analytics.

- Tools and technologies for ETL range from simple scripts to complex platforms like Pipeline Pilot and cloud services.

An "ETL pipeline" is the sequence of processes that extracts data from its sources, transforms it, and loads it into a storage system where it can be analyzed and used for business intelligence. This article explains how ETL pipelines work, why they matter, and how they are built and maintained.

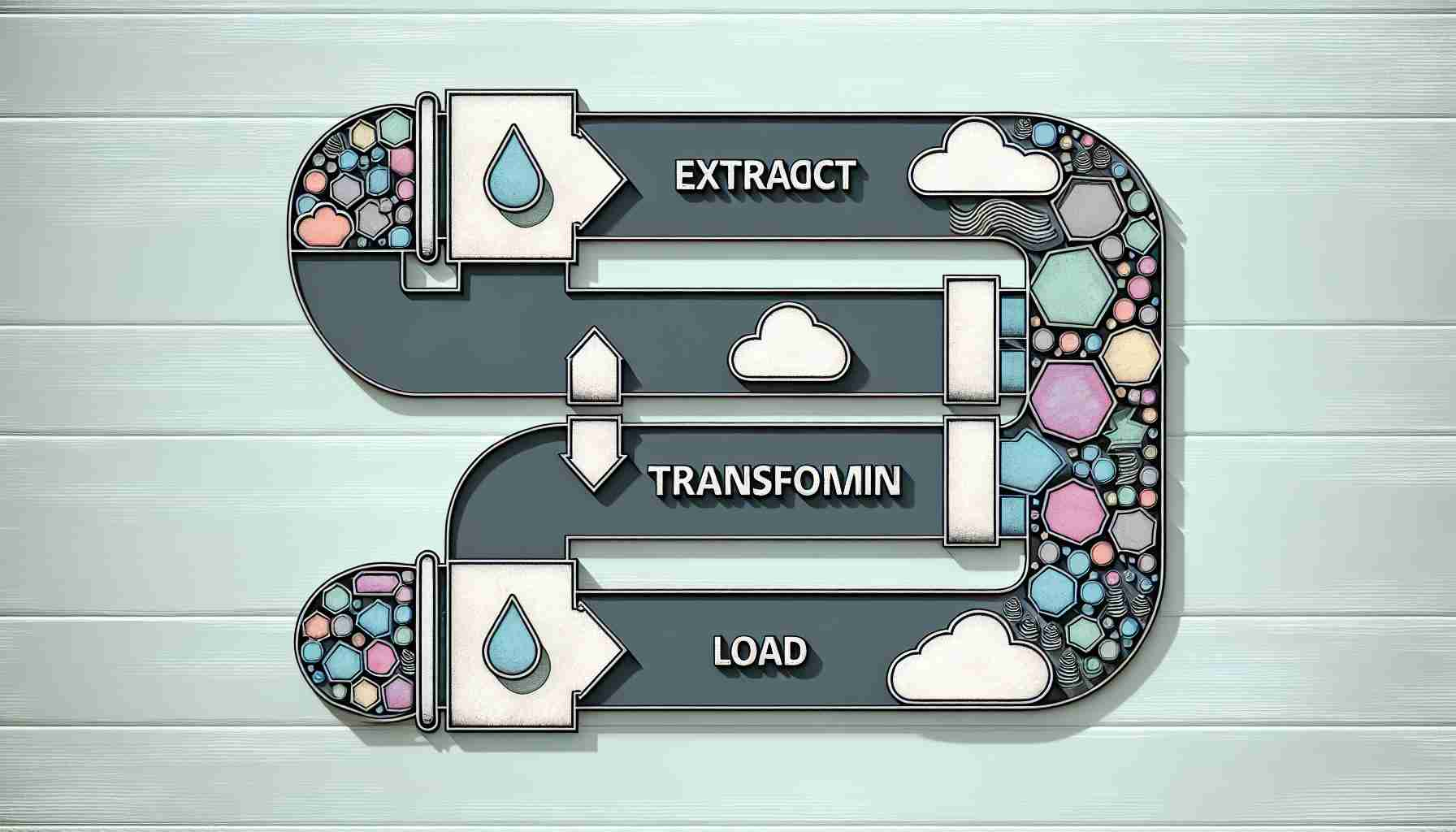

Introduction to ETL Pipelines

An ETL pipeline is a series of processes that extract data from various sources, transform it into a format suitable for analysis, and load it into a destination such as a database, data warehouse, or data lake. The ETL process is critical for businesses that rely on data-driven decision-making, because it helps ensure that the data is accurate, consistent, and readily available for analysis.

The ETL Process Explained

Extraction

The first step in an ETL pipeline is to extract data from its original sources. These sources can be diverse, ranging from databases and CRM systems to flat files and web services. The goal is to retrieve all necessary data with as little impact on the source systems' performance as possible.
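As a rough illustration of extraction, the sketch below reads from two kinds of sources using only the Python standard library. The `customers` table, the column names, and the in-memory CSV text are hypothetical stand-ins for real systems. Reading the database in batches is one simple way to limit load on the source.

```python
import csv
import io
import sqlite3
from typing import Iterator


def extract_csv(text_stream) -> Iterator[dict]:
    """Yield one dict per CSV row, keyed by the header line."""
    yield from csv.DictReader(text_stream)


def extract_table(conn: sqlite3.Connection, query: str, batch_size: int = 500) -> Iterator[dict]:
    """Yield query results in small batches instead of one large fetch."""
    cursor = conn.execute(query)
    columns = [col[0] for col in cursor.description]
    while True:
        rows = cursor.fetchmany(batch_size)
        if not rows:
            break
        for row in rows:
            yield dict(zip(columns, row))


# Hypothetical sources: an in-memory CSV "file" and an in-memory database.
csv_source = io.StringIO("id,email\n1,ann@example.com\n2,bob@example.com\n")
db_source = sqlite3.connect(":memory:")
db_source.execute("CREATE TABLE customers (id INTEGER, email TEXT)")
db_source.execute("INSERT INTO customers VALUES (3, 'cy@example.com')")

csv_rows = list(extract_csv(csv_source))
db_rows = list(extract_table(db_source, "SELECT id, email FROM customers"))
```

Note that the CSV rows carry every value as a string, while the database rows keep their column types. Reconciling differences like this is part of the transformation stage.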

Transformation

Once the data is extracted, it undergoes transformation. This step is crucial as raw data often contains inconsistencies, errors, or irrelevant information. Transformation processes may include:

- Cleaning: Removing or correcting inaccurate values.

- Normalization: Standardizing data formats.

- Deduplication: Eliminating duplicate records.

- Aggregation: Summarizing detailed data for analysis.

- Enrichment: Enhancing data with additional information.
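The transformation types listed above can be sketched in plain Python as follows. The order records, the field names, and the `REGION_BY_COUNTRY` lookup are made up for illustration; a real pipeline would apply its own rules, often with a library or an ETL tool rather than hand-written functions.

```python
from collections import defaultdict

# Hypothetical raw order records, as an extraction step might produce them.
raw_orders = [
    {"order_id": "1", "country": " us ", "amount": "10.50"},
    {"order_id": "2", "country": "DE", "amount": "n/a"},    # unparseable amount
    {"order_id": "1", "country": "US", "amount": "10.50"},  # duplicate of order 1
    {"order_id": "3", "country": "de", "amount": "4.25"},
]

# Hypothetical lookup table used for enrichment.
REGION_BY_COUNTRY = {"US": "Americas", "DE": "EMEA"}


def clean(record):
    """Cleaning: parse the amount, or return None if it is not a number."""
    try:
        amount = float(record["amount"])
    except ValueError:
        return None
    return {**record, "amount": amount}


def normalize(record):
    """Normalization: trim and upper-case the country code."""
    return {**record, "country": record["country"].strip().upper()}


def deduplicate(records):
    """Deduplication: keep the first record seen for each order_id."""
    seen = set()
    unique = []
    for record in records:
        if record["order_id"] not in seen:
            seen.add(record["order_id"])
            unique.append(record)
    return unique


def enrich(record):
    """Enrichment: attach a region looked up from the country code."""
    return {**record, "region": REGION_BY_COUNTRY.get(record["country"], "Unknown")}


def aggregate(records):
    """Aggregation: total order amount per region."""
    totals = defaultdict(float)
    for record in records:
        totals[record["region"]] += record["amount"]
    return dict(totals)


cleaned = [r for r in map(clean, raw_orders) if r is not None]
normalized = [normalize(r) for r in cleaned]
unique_orders = deduplicate(normalized)
enriched = [enrich(r) for r in unique_orders]
totals_by_region = aggregate(enriched)
```

In this sketch, normalization runs before deduplication so that records differing only in formatting are compared on equal terms.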

Loading

The final step is loading the transformed data into the target system. This could be a data warehouse designed for query and analysis or any other form of data repository. The loading process must ensure that the data is stored efficiently and securely, maintaining its integrity and accessibility.
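A minimal sketch of a load step, assuming SQLite stands in for the target system and that the `orders` table and its columns are hypothetical. It wraps the load in a single transaction so a failure leaves the target unchanged, and it keys rows on a primary key so re-running the same load does not create duplicates.

```python
import sqlite3

# Hypothetical transformed rows ready for loading.
rows = [
    (1, "US", 10.50),
    (3, "DE", 4.25),
]


def load_orders(conn: sqlite3.Connection, rows) -> None:
    """Load rows in one transaction; re-running with the same rows is safe."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute(
            "CREATE TABLE IF NOT EXISTS orders ("
            "order_id INTEGER PRIMARY KEY, country TEXT NOT NULL, amount REAL NOT NULL)"
        )
        # INSERT OR REPLACE overwrites an existing row with the same primary key.
        conn.executemany(
            "INSERT OR REPLACE INTO orders (order_id, country, amount) VALUES (?, ?, ?)",
            rows,
        )


warehouse = sqlite3.connect(":memory:")
load_orders(warehouse, rows)
load_orders(warehouse, rows)  # second run does not create duplicates
row_count = warehouse.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

Production warehouses usually provide their own bulk-loading and merge mechanisms; the transaction and idempotency ideas carry over, but the exact statements differ by system.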

Why ETL Pipelines Are Essential

ETL pipelines are vital for organizations to make sense of the vast amounts of data they collect. They enable businesses to:

- Consolidate data from multiple sources into a single repository.

- Ensure data quality and consistency, which is crucial for accurate analytics.

- Save time and resources by automating data integration processes.

- Support data governance and compliance efforts by providing traceable data lineage.

Tools and Technologies for ETL

There is a wide array of tools available for ETL, from custom scripts written in general-purpose languages like Python to platforms like Pipeline Pilot, which offers a graphical user interface for designing data workflows. Cloud data platforms such as Snowflake and Databricks, and data integration products such as Informatica, provide scalable options for businesses of all sizes.

Building an ETL Pipeline

Building an ETL pipeline involves several steps:

- Define the Data Sources: Identify all the data sources that will feed into the pipeline.

- Map the Data Flow: Outline how data will move from the sources through the transformation steps to the target system.

- Design the Transformation Logic: Determine the rules and operations that will be applied to the data.

- Implement the Pipeline: Use ETL tools or custom code to create the pipeline.

- Test and Validate: Ensure the pipeline works as intended and that the data is accurate and reliable.

- Monitor and Maintain: Regularly check the pipeline's performance and update it as necessary.
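The steps above can be sketched as one small, end-to-end pipeline. Everything specific here is an assumption made for illustration: the CSV text stands in for a real source, the `products` table stands in for the target, and the transformation rule (drop rows without a price, store prices in cents) is invented. The `validate` function shows one simple form the "Test and Validate" step might take.

```python
import csv
import io
import sqlite3

# Hypothetical source: CSV text standing in for a real file or API export.
SOURCE_CSV = "sku,price\nA1,9.99\nB2,\nC3,4.00\n"
EXPECTED_COLUMNS = {"sku", "price"}


def extract(text):
    reader = csv.DictReader(io.StringIO(text))
    # Fail fast if the source structure has changed.
    missing = EXPECTED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"source is missing columns: {sorted(missing)}")
    return list(reader)


def transform(rows):
    # Transformation rule: drop rows without a price, convert price to cents.
    return [
        (row["sku"], round(float(row["price"]) * 100))
        for row in rows
        if row["price"]
    ]


def load(conn, records):
    with conn:  # one transaction for the whole load
        conn.execute(
            "CREATE TABLE IF NOT EXISTS products (sku TEXT PRIMARY KEY, price_cents INTEGER)"
        )
        conn.executemany("INSERT OR REPLACE INTO products VALUES (?, ?)", records)


def validate(conn, expected_count):
    # Validation: the row count matches and no stored price is negative.
    count, min_price = conn.execute(
        "SELECT COUNT(*), MIN(price_cents) FROM products"
    ).fetchone()
    return count == expected_count and (min_price is None or min_price >= 0)


def run_pipeline(conn, text):
    records = transform(extract(text))
    load(conn, records)
    return {"loaded": len(records), "valid": validate(conn, len(records))}


target = sqlite3.connect(":memory:")
report = run_pipeline(target, SOURCE_CSV)
```

Keeping extract, transform, and load as separate functions makes each stage testable on its own, which helps with the monitoring and maintenance step.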

Challenges and Best Practices

ETL pipelines can be complex and may face challenges such as:

- Handling large volumes of data efficiently.

- Ensuring data security during the ETL process.

- Dealing with changes in source data structures or formats.

To address these challenges, it is important to:

- Plan thoroughly before building the pipeline.

- Employ robust error handling and logging mechanisms.

- Optimize performance through techniques like parallel processing.

- Keep security at the forefront, especially when dealing with sensitive data.
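Two of these practices, error handling with logging and parallel processing, can be sketched together using the Python standard library. The partition names and the `fetch_partition` function are hypothetical; it is written to fail once on purpose so that the retry path is exercised.

```python
import logging
import time
from concurrent.futures import ThreadPoolExecutor

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl")


def with_retries(task, attempts=3, delay_seconds=0.0):
    """Run task(); on failure, log the error and retry up to `attempts` times."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as exc:
            log.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                raise  # give up and surface the last error
            time.sleep(delay_seconds)


# Hypothetical source that fails once before succeeding.
calls = {"orders": 0}


def fetch_partition(name):
    if name == "orders":
        calls["orders"] += 1
        if calls["orders"] == 1:
            raise ConnectionError("temporary network error")
    return f"{name}: ok"


partitions = ["customers", "orders", "products"]

# Extract independent partitions in parallel; map() preserves input order.
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(
        pool.map(lambda p: with_retries(lambda: fetch_partition(p)), partitions)
    )

log.info("extracted %d partitions", len(results))
```

Threads suit I/O-bound work such as waiting on databases or web services. For CPU-heavy transformations, a process pool or a distributed engine is usually the better fit.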

Conclusion

ETL pipelines are the backbone of data analysis and business intelligence. They enable organizations to harness their data's potential by ensuring it is clean, consistent, and ready for analysis. With the right tools and practices, ETL pipelines can provide a competitive edge by unlocking valuable insights from data.

FAQs on ETL Pipeline

Q: What does ETL stand for? A: ETL stands for Extract, Transform, Load.

Q: Why is the transformation stage important in an ETL pipeline? A: The transformation stage is crucial because it ensures the data is clean, consistent, and formatted correctly for analysis, which directly impacts the accuracy of data-driven decisions.

Q: Can ETL pipelines handle real-time data processing? A: While traditional ETL pipelines are batch-oriented, modern ETL tools and platforms can handle real-time data processing, often referred to as streaming ETL.

Q: What are some common tools used for ETL? A: Common ETL tools include Informatica, Talend, Apache NiFi, and cloud-based services like AWS Glue and Azure Data Factory.

Q: How do data pipelines differ from ETL pipelines? A: Data pipelines refer to the broader concept of moving data from one system to another, which may include real-time data streams. ETL pipelines specifically involve the extract, transform, and load processes and are often associated with batch processing.

Q: Is coding required to build an ETL pipeline? A: While coding can be used to build custom ETL pipelines, many modern ETL tools offer graphical interfaces that allow users to design pipelines without writing code.
